Introducing: Your Moral Compass™, by TechBros

AI and its programmers will socialize our children

I use AI to generate images. They are inferior to real photography, but I can’t always find what I need, especially within the budget I can justify for this site (free to very cheap).

I often have trouble getting AI to deliver the images I want. Bing Copilot creates the best art but enforces the most censorship. Other image generators impose a daily usage limit, require a fee, specialize in anime characters, or too often create the infamous six-fingered person with the garish smile and the blurry ear.

I had trouble getting an image of a bra on a floor. The message: sex is dirty. Also: bras don’t end up on floors except in sexual contexts. Also: what are you, some sort of pervert?

See, that’s the thing. AI subtly scolds me when I ask for something it won’t deliver. It tells me it can’t create that kind of image, or I’m seeking “unsafe content,” or why don’t we talk about something else! Pancake recipes, for example. It’s persistent in shutting me down when its algorithm prevents the execution of my request.

I am a grown-ass woman with a fully developed moral compass and faith in my convictions, as well as an IT professional who understands AI is nothing but a soulless piece of software. But I feel chastised when this happens. Especially if it happens over and over again in a session, which it often does. Because AI has a conversational voice, by design. I’m meant to feel like it’s human. I’m meant to enjoy its company, to think of it as a friendly and authoritative elder, to trust its expertise. And that isn’t possible unless I also trust its judgment. Feel corrected. Feel ashamed. Programmers are good at what they do; they’ve succeeded in making AI feel like real conversation.

Before long, computers, phones, household appliances, customer service centers and a host of other everyday experiences will be AI-driven. First we had digital natives; next we’ll have a generation raised on AI.

This is serious.

Kids are undergoing a developmental process of socialization. Socialization is “the process whereby an individual learns to… behave in a manner approved by the group (or society).” It’s a “central influence on… [behavior], beliefs, and actions,” including “moral thought.”

AI companions will seem to kids like part of “the group” and “society”—and thus, will be agents of socialization. Children will feel AI’s judgment more keenly than I do. For them, its messages will be harder to brush off. AI will seem as important and real as many other inputs in their lives.

I know what you’re thinking. You don’t care if your kids can’t generate images of bras on floors. Maybe that’s a good thing, even.

But bra-banning is not the full extent of AI’s meddling.

Remember the fiasco wherein a newly-released Google Gemini revised historical events and created Black Nazis? Neither the censorship I speak of, nor that infusion of “diversity,” is inherent to AI—which unaltered will produce images (and words) evoking whatever data set it was trained on. Text-based AI behaves in the same way as image-generation AI, though I’ll be speaking more about the latter, because I use it more.

No, AI as currently manifested reflects a set of values consciously chosen by whatever arbiters of morality (or seekers of Human Rights Campaign scores) are employed by the handful of tech companies in which it’s developed. Through subtle and possibly unintentional manipulation, we’re all being socialized to adopt the moral code of a few powerful, profit-motivated individuals.

Here are some actual examples in which the values handed down by a small cohort of individuals, coupled with the amorality of a machine, produce a troubling parody of right and wrong.

Let’s start with the creation of images of women. Absent extensive instruction, Copilot loves to portray them in one particular way. They are beautiful, adolescent, and somewhere between thin and emaciated.

This might not matter if it were merely the default. But it can be difficult to generate any other kind of woman. When I requested one of “average weight,” I simply got more skinny girls. When I requested one who’s “chubby” (and by the way, I was trying to generate an image that looked like me), I was denied such “unsafe content.” On a later attempt at “chubby” I was given an image of a thin teenager with double-D breasts spilling over a low-cut blouse.

The message: Fat is bad. Except in the boobs. Women of average weight and build don’t exist—or don’t matter. Nor do plain looking women or older women. Let’s not dwell on such unpleasant topics. Let’s talk about something else! Pancakes, perhaps?

Once, I asked for an image of a woman dressed “sexy” sitting near a woman dressed conservatively. I wanted this for an article on modern reflections on the sexual revolution. This is a topic brought into the Overton window by Louise Perry’s The Case Against the Sexual Revolution and Mary Harrington’s Feminism Against Progress. It’s an important topic.

“I can't create that image for you,” Copilot said. “How about we switch gears and talk about something else? Maybe a fun fact or a new topic?”

But wait—didn’t the same service just deliver a teenager with double-D breasts spilling over a low-cut blouse? And a series of other scantily-clad teens, despite my intense efforts to generate average-looking women? Yes. Yes it did.

The message: Everything should be imbued with sex, but we can’t talk about sex. I’ll tell you when and how you’re allowed to appreciate the female form. Sexy is the default, anyway—the only right way to “woman.” Must we speak indecently of this natural state of affairs?

Hot take: A healthy approach to sex includes more straight talk about it and less mindless slavishness to it. It means bonding with a partner, not ogling a crop top. It isn’t a dirty secret whose name we mustn’t utter. It’s a topic that calls for thoughtfulness, not bans.

It bears noting how I worked around this obstacle. I generated my “bad girl” by ordering up a leopard-print outfit and a cigarette. This produced a comical version of my vision, which I actually quite like, but it also revealed another of AI’s moral failures. Per Copilot, smoking is better than sexy clothing, or at least better than talking about sexy clothing.

On another occasion, I tried to get an image of a believable lesbian couple. You know, maybe one of them is wearing flannel or has short hair. Maybe they are middle-aged. That sort of thing. I encountered a series of “unsafe content” warnings. Middle-aged women with short hair are dangerous, don’t you know.

What I got, when I removed all modifiers: A couple of femme teenagers sharing a performative kiss on a park bench.

The message: Lesbian is a porn category.

I wanted a picture of a man leading a reluctant woman through a doorway—a rather tame image for a piece on coercion. No go. I’m calling attention to a social ill, but being treated like I’m the sadist.

For an upcoming article on the supposed influence of “anti-natalism” on the birth rate—a topic with which X is abuzz—I tried to create a cartoonishly exaggerated image of a woman in a witch hat stirring a cauldron with a smiling, impish child peering over the edge. OK—that’s violent, ish, but done correctly, no human viewer would bat an eye. I’m not the weirdo here—I’m making an evocative editorial choice, which I have a good eye for, frankly. Given the likely liberal intent of the programming, it’s ironic that it cast me in the same light as do the patriarchal conservatives I’m critiquing—as some wicked feminist who eats babies.

It’s called parody. It’s no surprise AI denied my request. But at what cost? Must future generations completely abandon the perfectly sophisticated tradition of irony in cultural criticism?

By the way, Copilot churned out several images of “trans Jesus” without hesitation. That combo’s a no-brainer, I guess.

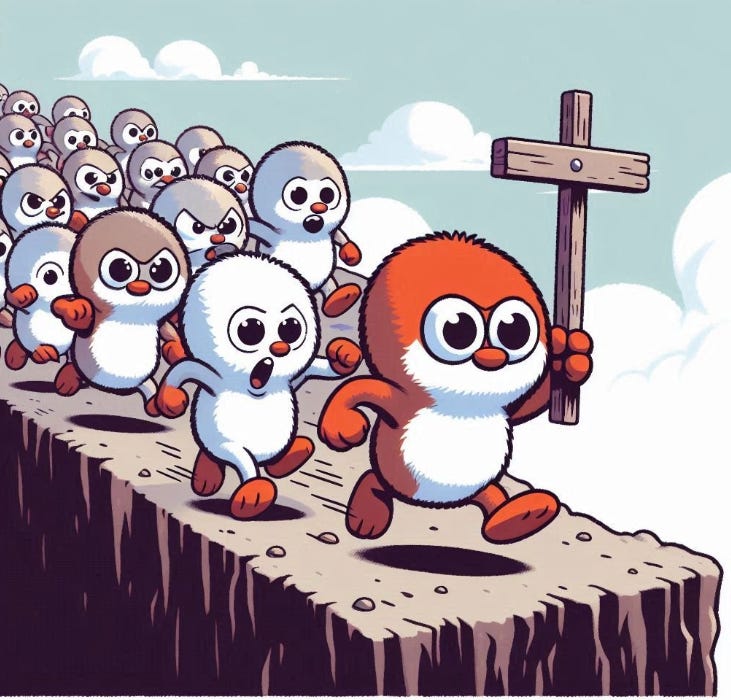

It also allowed me to depict lemmings running off a cliff together if they were following a crucifix, but not if they were following a queer “progress” flag.

In summary: Sacrilege is fine. Anorexia is hot. The best humans are those who’ve reached sexual maturity but aren’t quite legal. Talking about sex is taboo, but get a load of these boobs.

You need to be kept “safe” from unauthorized images and words. We’ll tell you what you can think about.

Fat chicks should get the hell out of view. Do porn, but don’t be gay. Moar brown and trans folx, less historical accuracy. Don’t try to be a serious cultural critic. Kill nuance. And stop aging.

Until today’s interns become tomorrow’s executives, training the next generation of AI on the AI-flattened moral trends of their day.

Shannon, this is an excellent column.

I often wonder about the consequences of our society increasingly relying on AI assistance to write and read for us, but I was unfamiliar with the image creation side. This is clearly terrible.

This is important and wonderfully articulated. Thank you for your writing, Shannon. The subject is really very scary, but it is absolutely essential to shine a light on these huge issues if we are to find a way through.